Evan Tahler has used Porter at three different companies: Grouparoo (his own startup), Airbyte, and now Arcade.dev, which just raised $12 million in seed funding led by Laude Ventures. We sat down with Evan to understand what makes Porter his go-to infrastructure management platform.

The Ultimate Endorsement: Choosing the Same Platform Three Times

Arcade.dev is the enterprise agent action platform that solves the authorization and integration challenges preventing 70% of AI agent projects from reaching production. They enable agents to safely access production systems like Gmail, Slack, and Salesforce with proper user permissions - something even ChatGPT can't do. As a fast-growing startup serving Fortune 500 financial institutions, Arcade faces the familiar challenge of scaling infrastructure without drowning in operational complexity.

What makes Arcade's infrastructure story unique isn't just their technical requirements - it's their Head of Engineering’s track record. Evan has now used Porter at three different companies: his own startup that was acquired by Airbyte, at Airbyte itself, and now as Head of Engineering at Arcade.dev. Most founders or CTOs might use a platform once and move on, but this pattern of bringing Porter to each new role isn't accidental.

"When I joined Arcade, I showed Sterling [Founding Engineer at Arcade.dev] Porter but really wanted him to decide - as he knew what would be best. You really want Kubernetes and full control, but the vast majority of the time you want Heroku-like simplicity. Porter gives you Heroku but with escape hatches." - Evan Tahler, Head of Engineering at Arcade.dev

ECS Complexity Was Holding Back Development

Before Porter, the Arcade team ran everything on ECS Fargate, an AWS managed service for application hosting. While Fargate initially provided simplicity, it quickly became a bottleneck as their AI tool platform evolved. The deployment process was painfully slow, taking far too long to redeploy and update applications. Even basic deployment patterns like blue-green releases required complex configuration involving additional AWS services like CodeDeploy, making the entire system feel fragile and over-engineered for their needs.

"ECS just takes a very long time to redeploy and update, and it was overly complex for what we needed. In order to configure Fargate for blue-green deployments the way we wanted, it was going to take another AWS service like CodeDeploy, and everything was very fragile." - Sterling Dreyer, Founding Engineer at Arcade.dev

The advantage of Porter comes from its integrated CI/CD approach. Porter handles the entire build-push-deploy pipeline through GitHub Actions integration, eliminating the need to configure and maintain separate CI/CD tooling. On Porter, deployments are as simple as merging to main. Furthermore, certain features that come out of the box with Porter, like blue-green deployments, require custom configuration across multiple AWS services when using Fargate.

Why Porter Beat the Alternatives Again

Sterling conducted a thorough evaluation, creating a formal decision matrix that the team documented in their engineering docs. Alongside Porter, he considered Helm with ArgoCD on self-managed EKS, HashiCorp Nomad for container orchestration, Octopus Deploy for deployment automation, Spinnaker for continuous delivery, and the option of staying with ECS and working around its limitations.

After eliminating several options that didn't fit their needs, the final decision came down to two choices: ArgoCD on self-managed EKS or Porter.

Porter won because of its unique positioning in the infrastructure landscape. Unlike pure PaaS solutions that can become restrictive as companies grow, or self-managed Kubernetes, which requires significant operational effort, Porter provides what Evan calls the "Heroku with escape hatches" approach. Most deployments can be as simple as merging to main, but when teams need to utilize more advanced Kubernetes features, the platform doesn't fight against customization.

Porter provisions and manages each cloud provider's native Kubernetes offering (EKS, AKS, and GKE) while abstracting away the operational complexity. For Arcade.dev, this meant accessing Kubernetes' container orchestration and scaling capabilities without requiring specialized expertise across the entire team. The abstraction also provides flexibility: teams can start with Porter's simplified interface and gradually adopt advanced Kubernetes features as needs evolve.

Sterling was able to set up Porter on his own in a matter of days, starting with the staging environment and then moving on to production. Deploying to Porter is as easy as pointing it at your repository.

"Setting up our production environment was as easy as copying the staging configuration through porter.yaml and making slight changes." - Sterling Dreyer, Founding Engineer at Arcade.dev
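
The workflow Sterling describes, reusing the staging configuration with a few production-specific changes, can be sketched in Python as a base configuration plus a small set of overrides. This is only an illustration of the idea: the keys below (`name`, `services`, `instances`, `cpu_cores`) and the `derive_config` helper are hypothetical and do not reflect the actual porter.yaml schema or Arcade's real configuration.

```python
import copy

# Hypothetical staging configuration. The keys are illustrative only and
# are NOT the real porter.yaml schema.
STAGING = {
    "name": "example-app-staging",
    "services": {
        "web": {"instances": 1, "cpu_cores": 0.5},
        "worker": {"instances": 1, "cpu_cores": 0.5},
    },
}


def derive_config(base, overrides):
    """Return a deep copy of `base` with `overrides` merged in recursively."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Merge nested sections instead of replacing them wholesale.
            merged[key] = derive_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Production reuses everything from staging except the "slight changes".
PRODUCTION = derive_config(
    STAGING,
    {"name": "example-app-production", "services": {"web": {"instances": 3}}},
)
```

Keeping the production differences in one small overrides mapping makes it easy to see exactly how the two environments diverge.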

Advanced Infrastructure: Programmatic Deployment with Porter's API

Arcade.dev's platform requirements go beyond typical web applications. As the unified agent action platform, they enable thousands of enterprise agents to securely execute actions across production systems - from API calls to data processing to custom workflows. Their "Arcade Deploy" product must scale these agent workloads while enforcing granular permissions and maintaining the isolation that security teams demand.

"GitHub Actions and the CLI were too restrictive for what we needed. The Porter API solves a lot of our problems with how we deploy programmatically." - Sterling Dreyer, Founding Engineer at Arcade.dev

Using the alpha version of Porter's updated API, Arcade can now spin up instances dynamically for user-generated code execution while maintaining clear separation between their reliable core services and the ephemeral user workers. This programmatic approach enables them to provide high-quality hosting for AI tools, managing different classes of workloads with appropriate reliability and scaling characteristics.

"The API has been top-of-mind for me for the last month. It solves a lot of our problems with how to deploy things programmatically and have more refined control." - Sterling Dreyer, Founding Engineer at Arcade.dev

Current Setup: Efficiency and Future Planning

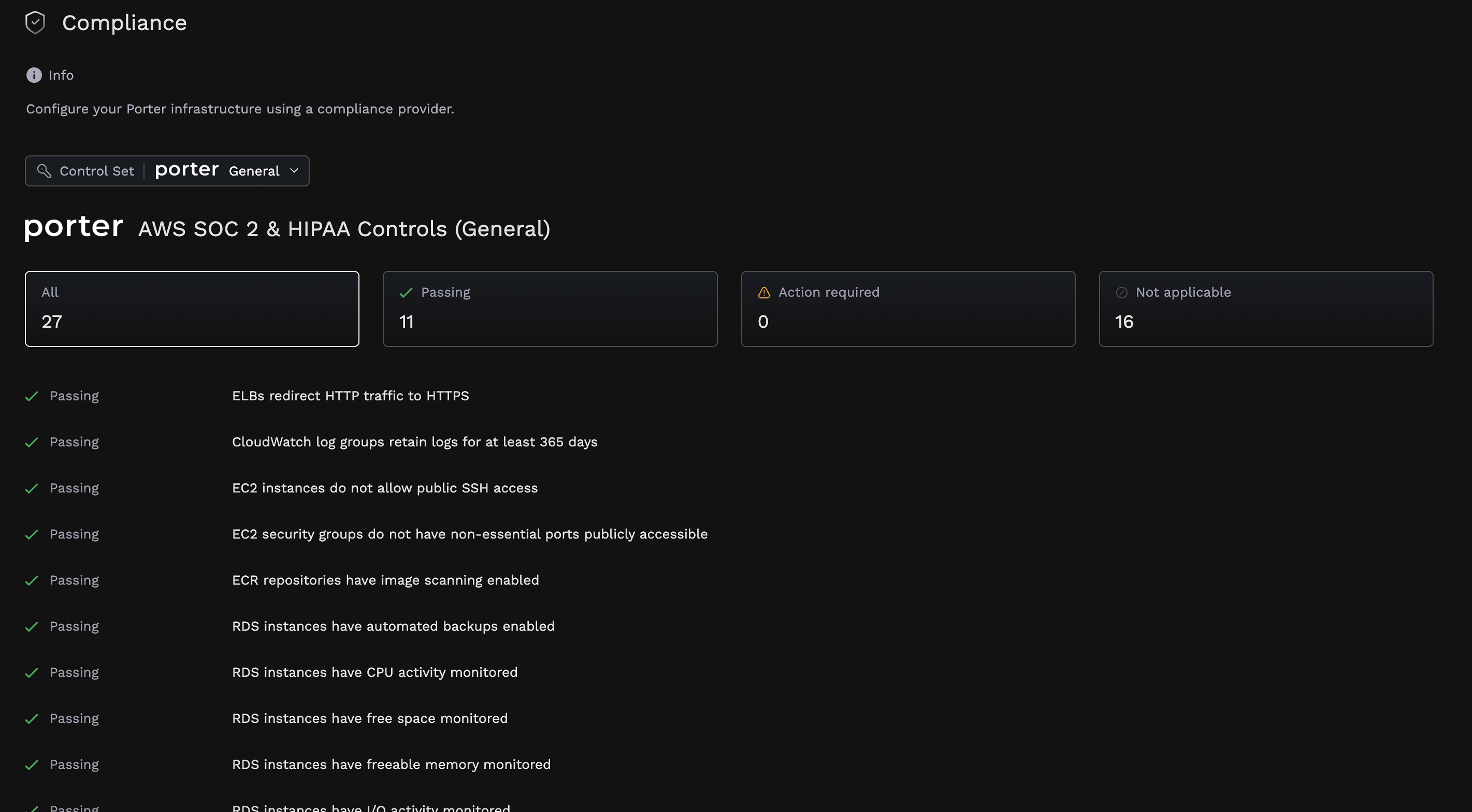

Arcade currently runs two Porter clusters, staging and production, with high availability configured on production. The company also recently became SOC 2 compliant, using Porter in the process. Porter's one-click compliance feature brings all Porter-managed AWS infrastructure into compliance, including EKS, EC2, RDS, S3, and auxiliary services like CloudWatch, so companies can meet all of the infrastructure controls on compliance management platforms like Vanta, Oneleet, or Thoropass. As a result, Sterling avoided the headache of configuring and continually managing the infrastructure for compliance.

Looking ahead, Evan and Sterling have several infrastructure initiatives where Porter continues to provide value. They plan to adopt Porter-managed Kafka in the coming months, preferring Porter's upcoming offering to avoid the operational overhead of managing Kafka themselves.

"We also don't want to manage Kafka ourselves. If Porter can handle setup and day 2 operations just like it has with app deployments on EKS, it means we don’t have to deal with the queue ourselves - then that takes more of the load off of us." - Sterling Dreyer, Founding Engineer at Arcade.dev

The team is also developing a fine-tuned embedding model that their services will interact with, choosing self-hosting over API calls for cost efficiency in their high-volume usage patterns (along with greater reliability and improved latency with the model being colocated alongside their application workloads). Arcade.dev plans to run these workloads using Porter Inference.

Additionally, Porter's cloud-agnostic design positions Arcade well for a multi-cloud strategy, particularly as they consider migrating some workloads to take advantage of competitive cloud pricing and credits.

What Makes a Platform Worth Repeat Usage?

After leveraging Porter across different companies and technical contexts, Evan has developed clear criteria for infrastructure tooling that's worth standardizing on. The platform must provide simple deployment workflows for day-to-day development while offering sophisticated capabilities when needed. Equally important is avoiding vendor lock-in through architecture that maintains customer control. Porter's approach of deploying everything into customers’ cloud accounts means teams always have an exit strategy.

Reliability through architectural design has proven valuable across all three of Evan's Porter implementations. "If Porter goes down, we're still fine," he emphasizes, highlighting how the platform's bring-your-own-cloud deployment model separates Porter's availability from customer application availability (although the platform itself has maintained three nines of availability over the past eight months). At Arcade, the pattern held even after Sterling conducted an independent evaluation and reached the same conclusion.
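
To put "three nines" in perspective, the short calculation below converts an availability target into a downtime budget. It assumes that "three nines" means 99.9% and approximates eight months as eight 30-day months; both are assumptions made for illustration, not figures reported by Porter.

```python
def downtime_budget_hours(availability, days):
    """Maximum downtime, in hours, allowed by an availability target over a period."""
    total_hours = days * 24
    return total_hours * (1 - availability)


# Assumptions: "three nines" = 99.9%, and eight months ~ 8 * 30 days.
budget = downtime_budget_hours(0.999, 8 * 30)
print(f"{budget:.2f} hours")
```

Under these assumptions the budget works out to a little under six hours of platform downtime across the whole period. Because workloads run in the customer's own cloud account, that budget applies to the Porter platform itself and not to the applications it deploys.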
